Autoregressive Renaissance in Neural PDE Solvers

Recent developments in the field of neural partial differential equation (PDE) solvers have placed a strong emphasis on neural operators. However, the paper Message Passing Neural PDE Solver by Brandstetter et al. published in ICLR 2022 revisits autoregressive models and designs a message passing graph neural network that is comparable with or outperforms both the state-of-the-art Fourier Neural Operator and traditional classical PDE solvers in its generalization capabilities and performance. This blog post delves into the key contributions of this work, exploring the strategies used to address the common problem of instability in autoregressive models and the design choices of the message passing graph neural network architecture.

Introduction

Improving PDE solvers has trickle down benefits to a vast range of other fields.

Partial differential equations (PDEs) play a crucial role in modeling complex systems and understanding how they change over time and in space.

They are used across physics and engineering, modeling a wide range of physical phenomena like heat transfer, sound waves, electromagnetism, and fluid dynamics, but they can also be used in finance to model the behavior of financial markets, in biology to model the spread of diseases, and in computer vision to model the processing of images.

They are particularly interesting in deep learning!

- Neural networks can be used to solve complex PDEs.

- Embedding knowledge of a PDE into a neural network can help it generalize better and/or use less data

- PDEs can help explain and/or interpret neural networks.

Despite their long history, dating back to equations first formalized by Euler over 250 years ago, finding numerical solutions to PDEs continues to be a challenging problem.

The recent advances in machine learning and artificial intelligence have opened up new possibilities for solving PDEs in a more efficient and accurate manner. These developments have the potential to revolutionize many fields, leading to a better understanding of complex systems and the ability to make more informed predictions about their behavior.

The background and problem set up precedes a brief look into classical and neural solvers, and finally discusses the message passing neural PDE solver (MP-PDE) introduced by Brandstetter et al.

Background

Let's brush up on the basics…

The notation and definitions provided match those in the paper for consistency, unless otherwise specified.

Ordinary differential equations (ODEs) describe how a function changes with respect to a single independent variable and its derivatives. In contrast, PDEs are mathematical equations that describe the behavior of a dependent variable as it changes with respect to multiple independent variables and their derivatives.

Formally, for one time dimension and possibly multiple spatial dimensions denoted by \(\textbf{x}=[x_{1},x_{2},x_{3},\text{...}]^{\top} \in \mathbb{X}\), a general (temporal) PDE may be written as

$$\partial_{t}\textbf{u}= F\left(t, \textbf{x}, \textbf{u},\partial_{\textbf{x}}\textbf{u},\partial_{\textbf{xx}}\textbf{u},\text{...}\right) \qquad (t,\mathbf{x}) \in [0,T] \times \mathbb{X}$$

- Initial condition: \(\mathbf{u}(0,\mathbf{x})=\mathbf{u}^{0}(\mathbf{x})\) for \(\mathbf{x} \in \mathbb{X}\)

- Boundary conditions: \(B[ \mathbf{u}](t,x)=0\) for \((t,\mathbf{x}) \in [0,T] \times \partial \mathbb{X}\)

Many equations are solutions to such PDEs alone. For example, the wave equation is given by \(\partial_{tt}u = \partial_{xx}u\). You will find that any function in the form \(u(x,t)=F(x-ct)+\) \(G(x+ct)\) is a potential solution. Initial conditions are used to specify how a PDE "starts" in time, and boundary conditions determine the value of the solution at the boundaries of the region where the PDE is defined.

Types of boundary conditions

Dirichlet boundary conditions prescribe a fixed value of the solution at a particular point on the boundary of the domain. Neumann boundary conditions, on the other hand, prescribe the rate of change of the solution at a particular point on the boundary. There are also mixed boundary conditions, which involve both Dirichlet and Neumann conditions, and Robin boundary conditions, which involve a linear combination of the solution and its derivatives at the boundary.The study of PDEs is in itself split into many broad fields. Briefly, these are two other important properties in addition to the initial and boundary conditions:

Linearity

- Linear: the highest power of the unknown function appearing in the equation is one (i.e., a linear combination of the unknown function and its derivatives)

- Nonlinear: the highest power of the unknown function appearing in the equation is greater than one

Homogeneity

- Homogeneous: PDEs with no constant terms (i.e., the right-hand side is equal to zero)

- Inhomogeneous: PDEs with a non-zero constant term on the right-hand side

PDEs can be either linear or nonlinear, homogeneous or inhomogeneous, and can contain a combination of constant coefficients and variable coefficients. They can also involve a variety of boundary conditions, such as Dirichlet, Neumann, and Robin conditions, and can be solved using analytical, numerical, or semi-analytical methods

Brandstetter et al.

\(\partial_{t} \mathbf{u} + \nabla \cdot \mathbf{J}(\mathbf{u}) = 0\)

-

\(J\) is the flux, or the amount of some quantity that is flowing through a region at a given time

-

\(\nabla \cdot J\) is the divergence of the flux, or the amount of outflow of the flux at a given point

Additionally, they consider Dirichlet and Neumann boundary conditions.

Solving PDEs the classical way

A brief search in a library will find numerous books detailing how to solve various types of PDEs.

Analytical methods: an exact solution to a PDE can be found by mathematical means

- Separation of Variables

- This method involves expressing the solution as the product of functions of each variable, and then solving each function individually. It is mainly used for linear PDEs that can be separated into two or more ordinary differential equations.

- Green's Functions

- This method involves expressing the solution in terms of a Green's function, which is a particular solution to a homogeneous equation with specified boundary conditions.

Semi-analytical methods: an analytical solution is combined with numerical approximations to find a solution

- Perturbation methods

- This method is used when the solution to a PDE is close to a known solution or is a small deviation from a known solution. The solution is found by making a perturbation to the known solution and solving the resulting equation analytically.

- Asymptotic methods

- In this method, the solution is represented as a series of terms that are solved analytically. The solution is then approximated by taking the leading terms of the series.

Very few PDEs have analytical solutions, so numerical methods have been developed to approximate PDE solutions over a wider range of potential problems.

Numerical Methods

Often, approaches for temporal PDEs follow the method of lines (MOL).

Every point of the discretization is then thought of as a separate ODE evolving in time, enabling the use of ODE solvers such as Runge-Kutta methods.

1. Discretizing the problem

In the most basic case (a regular grid), arbitrary spatial and temporal resolutions \(\mathbf{n_{x}}\) and \(n_{t}\) can be chosen and thus used to create a grid where \(\mathbf{n_{x}}\) is a vector containing a resolution for each spatial dimension.

The domain may also be irregularly sampled, resulting in a grid-free discretization. This is often the case with real-world data that comes from scattered sensors, for example.

FDM or any other time discretization technique can be used to discretize the time domain.

One direction of ongoing research seeks to determine discretization methods which can result in more efficient numerical solvers (for example, take larger steps in flatter regions and smaller steps in rapidly changing regions).

2. Estimating the spatial derivatives

A popular choice when using a gridded discretization is the finite difference method (FDM). Spatial derivative operators are replaced by a stencil which indicates how values at a finite set of neighboring grid points are combined to approximate the derivative at a given position. This stencil is based on the Taylor series expansion.

The finite volume method (FVM) is another approach which works for irregular geometries. Rather than requiring a grid, the computation domain can be divided into discrete, non-overlapping control volumes used to compute the solution for that portion

While this method only works for conservation form equations, it can handle complex problems with irregular geometries and fluxes that are difficult to handle with other numerical techniques such as the FDM.

In the pseudospectral method (PSM), PDEs are solved pointwise in physical space by using basis functions to approximate the spatial derivatives

It is well-suited for solving problems with smooth solutions and periodic boundary conditions, but its performance drops for irregular or non-smooth solutions.

3. Time updates

The resulting problem is a set of temporal ODEs which can be solved with classical ODE solvers such as any member of the Runge-Kutta method family.

Limitations of Classical Methods

The properties of a PDE, such as its order, linearity, homogeneity, and boundary conditions, determine its solution method. Different methods have been developed based on the different properties and requirements of the problem at hand. Brandstetter at al. categorizes these requirements into the following

| User | Structural | Implementational |

|---|---|---|

| Computation efficiency, computational cost, accuracy, guarantees (or uncertainty estimates), generalization across PDEs | Spatial and temporal resolution, boundary conditions, domain sampling regularity, dimensionality | Stability over long rollouts, preservation of invariants |

The countless combinations of requirements resulted in what Bartels defines as a splitter field

These methods, while effective and mathematically proven, often come at high computation costs. Taking into account that PDEs often exhibit chaotic behaviour and are sensitive to any changes in their parameters, re-running a solver every time a coefficient or boundary condition changes in a single PDE can be computationally expensive.

Courant–Friedrichs–Lewy (CFL) condition

According to this condition, as the number of dimensions increases, the size of the temporal step must decrease and therefore numerical solvers become very slow for complex PDEs.

Neural Solvers

Neural solvers offer some very desirable properties that may serve to unify some of this splitter field. Neural networks can learn and generalize to new contexts such as different initial/boundary conditions, coefficients, or even different PDEs entirely

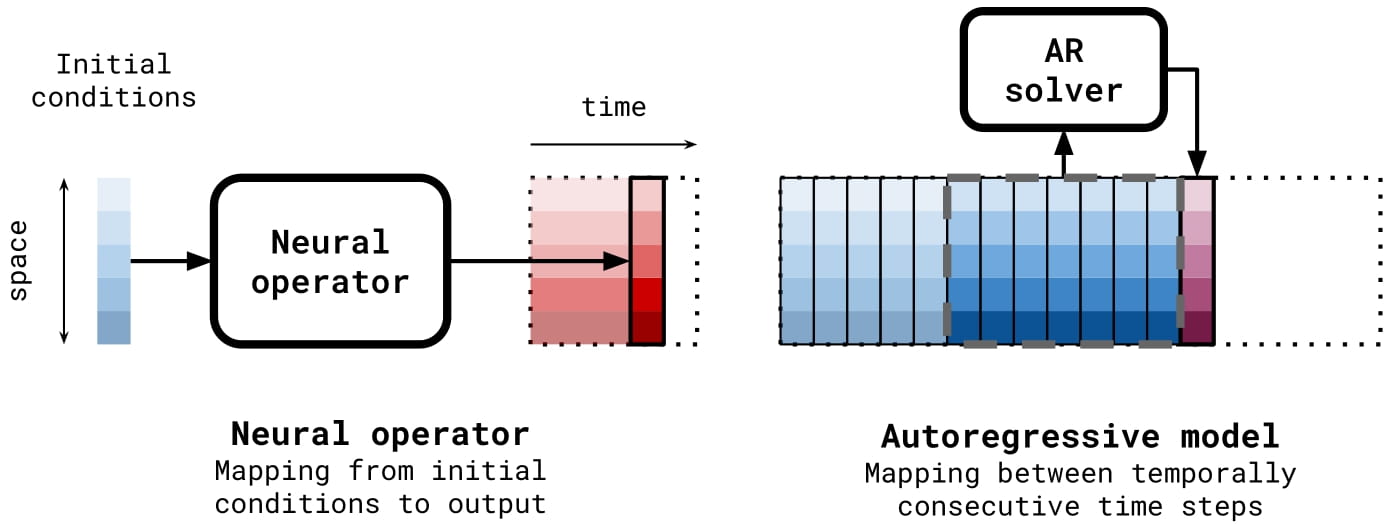

Neural operator methods

Neural operator methods model the solution of a PDE as an operator that maps inputs to outputs. The problem is set such that a neural operator \(\mathcal{M}\) satisfies \(\mathcal{M}(t,\mathbf{u}^{0}) = \mathbf{u}(t)\) where \(\mathbf{u}^{0}\) are the initial conditions

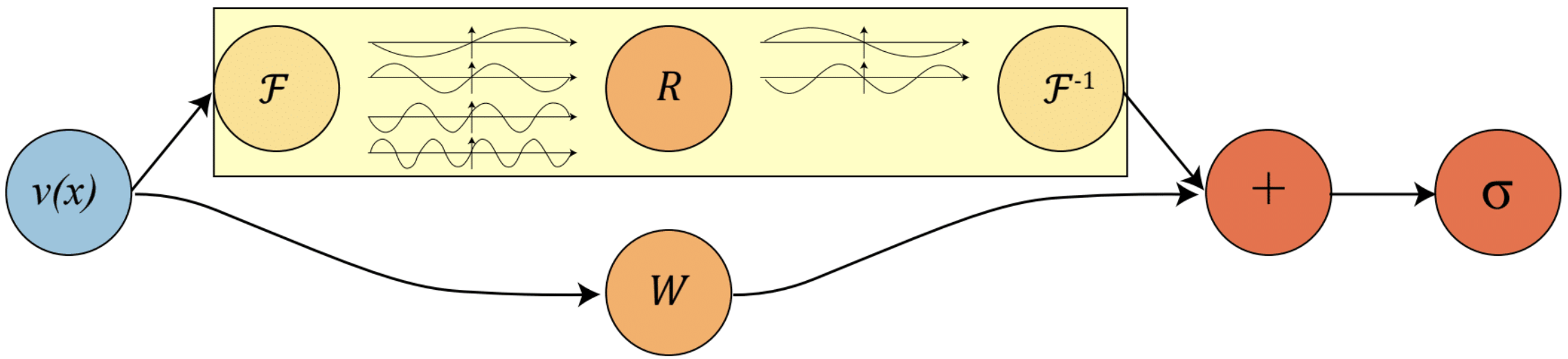

One of the current state-of-the-art models is the Fourier Neural Operator (FNO)

These global integral operators (implemented as Fourier space convolutional operators) are combined with local nonlinear activation functions, resulting in an architecture which is highly expressive yet computationally efficient, as well as being resolution-invariant.

While the vanilla FNO required the input function to be defined on a grid due to its reliance on the FFT, further work developed mesh-independent variations as well

Convolution Theorem

The Fourier transform of the convolution of two signals is equal to the pointwise product of their individual Fourier transforms

Neural operators are able to operate on multiple domains and can be completely data-driven.

However, these models do not tend to predict out-of-distribution \(t\) and are therefore limited when dealing with temporal PDEs. Another major barrier is their relative lack of interpretability and guarantees compared to classical solvers.

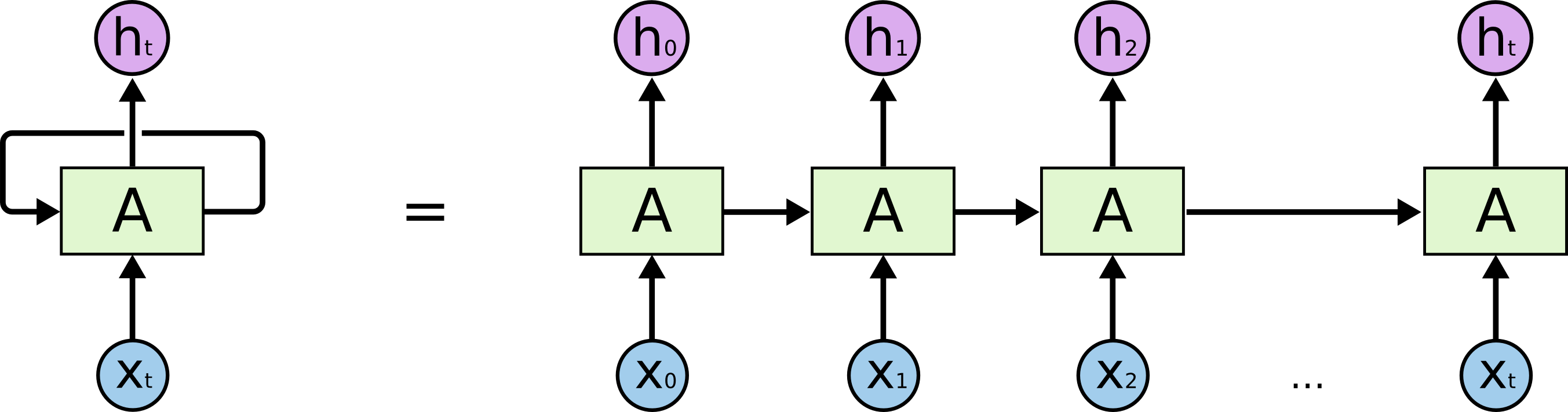

Autoregressive methods

While neural operator methods directly mapped inputs to outputs, autoregressive methods take an iterative approach instead. For example, iterating over time results in a problem such as \(\mathbf{u}(t+\Delta t) = \mathcal{A}(\Delta t, \mathbf{u}(t))\) where \(\mathcal{A}\) is some temporal update

Three autoregressive works mentioned by Brandstetter et al. are hybrid methods which use neural networks to predict certain parameters for finite volume, multigrid, and iterative finite elements methods. All three retain a (classical) computation grid which makes them somewhat interpretable

Other autoregressive models include PixelCNN for images, WaveNet for audio, and the Transformer for text.

However, autoregressive models have not gained the acclaim seen by neural operators as a whole.

This is on one hand due to their limitations in generalization. In Hsieh et al.’s case, an existing numerical method must be used to craft a complementary neural iterator

Another major concern is the accumulation of error, which is particularly detrimental for PDE problems that often exhibit chaotic behavior

Message Passing Neural PDE Solver (MP-PDE)

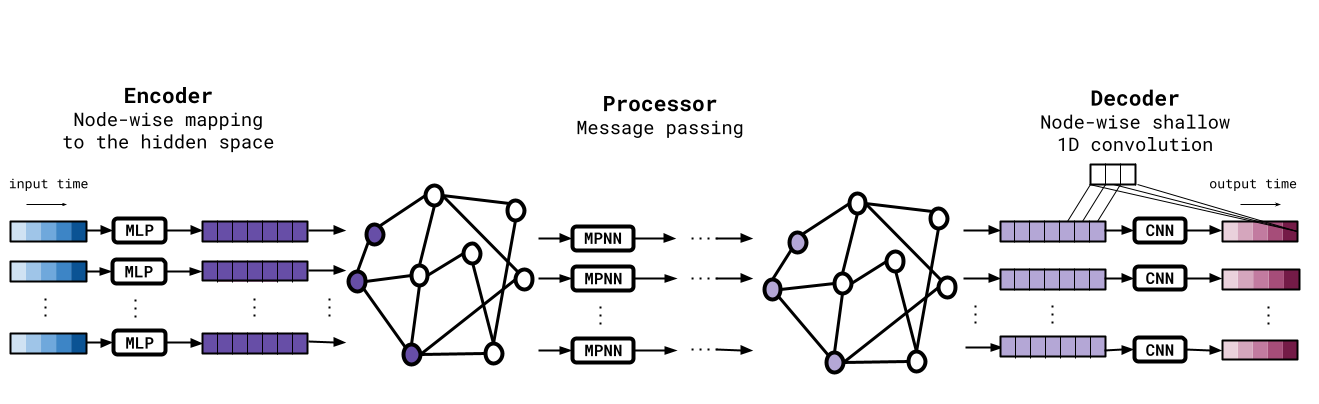

Brandstetter et al. propose a fully neural PDE solver which capitalizes on neural message passing. The overall architecture is laid out below, consisting of an MLP encoder, a GNN processor, and a CNN decoder.

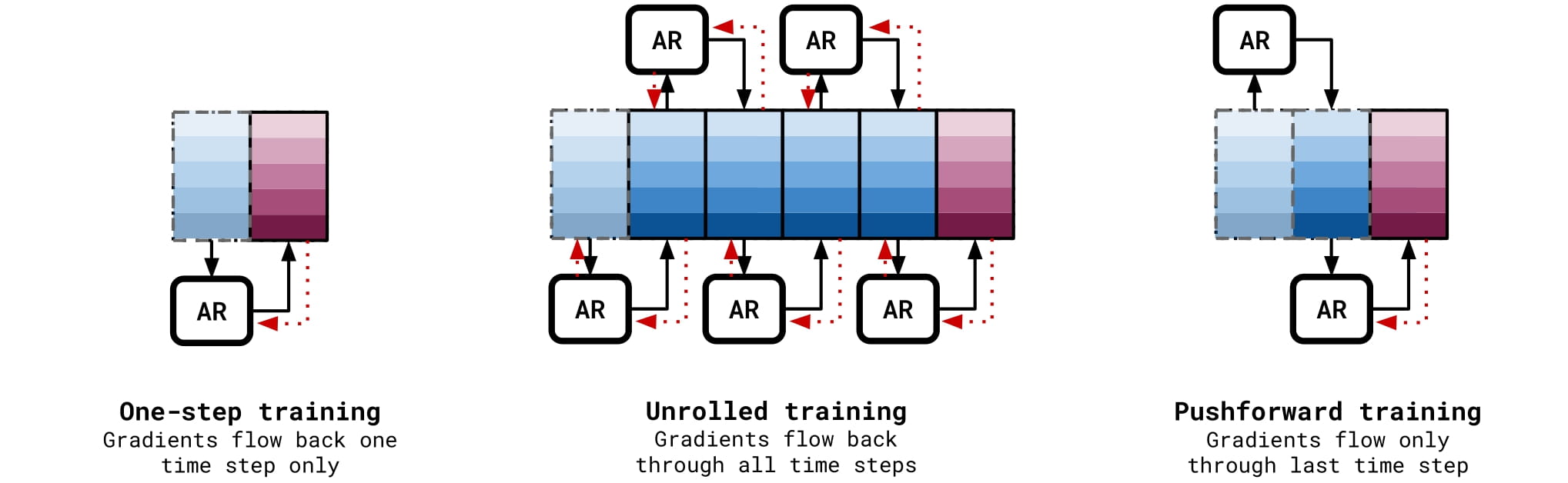

At its core, this model is autoregressive and thus faces the same challenge listed above. Two key contributions of this work are the pushforward trick and temporal bundling which mitigate the potential butterfly effect of error accumulation

The Pushforward Trick and Temporal Bundling

During testing, the model uses current time steps (first from data, then from its own predictions) to approximate the next time step.

This results in a distribution shift problem because the inputs are no longer solely from ground truth data: the distribution learned during training will always be an approximation of the true data distribution. The model will appear to overfit to the one-step training distribution and perform poorly the further it continues to predict.

An adversarial-style stability loss is added to the one-step loss so that the training distribution is brought closer to the test time distribution

\(L_{\text{one-step}} =\) \(\mathbb{E}_{k}\) \(\mathbb{E}_{\mathbf{u^{k+1}|\mathbf{u^{k},\mathbf{u^{k} \sim p_{k}}}}}\) \([\) \(\mathcal{L}\) \((\) \(\mathcal{A}(\mathbf{u}^{k})\) \(,\) \(\mathbf{u}^{k+1}]\)

The loss function is used to evaluate the difference between the temporal update and the expected next state, and the overall one-step loss is calculated as the expected value of this loss over all time-steps and all possible next states.

\(L_{\text{stability}} = \mathbb{E}_{k}\mathbb{E}_{\mathbf{u^{k+1}|\mathbf{u^{k},\mathbf{u^{k} \sim p_{k}}}}}[\mathbb{E}_{\epsilon | \mathbf{u}^{k}} [\mathcal{L}(\mathcal{A}(\mathbf{u}^{k}+\) \(\epsilon\) \()),\mathbf{u}^{k+1}]]\)

\(L_{\text{total}} = L_{\text{one-step}} + L_{\text{stability}}\)

The stability loss is largely based off the one-step loss, but now assumes that the temporal update uses noisy data.

The pushforward trick lies in the choice of \(\epsilon\) such that \(\mathbf{u}^{k}+\epsilon = \mathcal{A}(\mathbf{u}^{k-1})\), similar to the test time distribution. Practically, it is implemented to be noise from the network itself so that as the network improves, the loss decreases.

Necessarily, the noise of the network must be known or calculated to implement this loss term. So, the model is unrolled for 2 steps but only backpropagated over the most recent unroll step, which already has the neural network noise

While the network could be unrolled during training, this not only slows the training down but also might result in the network learning shortcuts across unrolled steps.

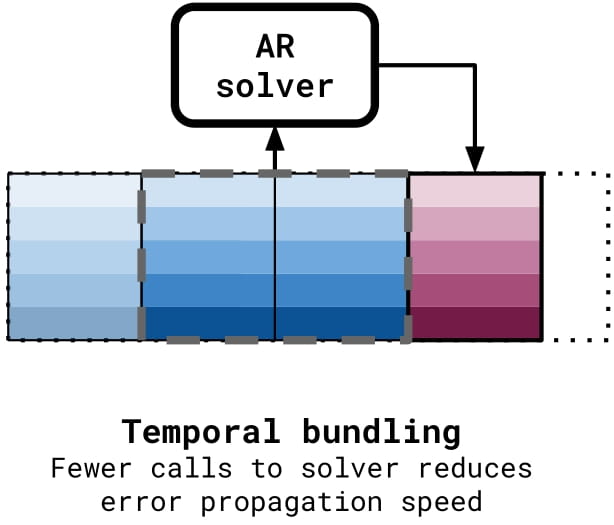

Temporal bundling

This trick complements the previous by reducing the amount of times the test time distribution changes. Rather than predicting a single value at a time, the MP-PDE predicts multiple time-steps at a time, as seen above

Network Architecture

GNNs have been used as PDE solvers in a variety of works

| Classical Numerical Method | MP-PDE Network Component |

|---|---|

| Partitioning the problem onto a grid | Encoder Encodes a vector of solutions into node embeddings |

| Estimating the spatial derivatives | Processor Estimates spatial derivatives via message passing |

| Time updates | Decoder Combines some representation of spatial derivatives smoothed into a time update |

- Encoder

The encoder is implemented as a two-layer MLP which computes an embedding for each node \(i\) to cast the data to a non-regular integration grid:

\(\mathbf{f}_{i}^{0} = \epsilon^{v}([\mathbf{u}_{i}^{k-K:k},\mathbf{x}_{i},t_{k},\theta_{PDE}])\)

where \(\mathbf{u}_{i}^{k-K:k}\) is a vector of previous solutions (the length equaling the temporal bundle length), \(\mathbf{x}_{i}\) is the node's position, \(t_{k}\) is the current timestep, and \(\theta_{PDE}\) holds equation parameters. - Processor

The node embeddings from the encoder are then used in a message passing GNN. The message passing algorithm, which approximates spatial derivatives, is run \(M\) steps using the following updates:

\(\text{edge } j \to i \text{ message:} \qquad \mathbf{m}_{ij}^{m} =\) \(\phi\) \((\) \(\mathbf{f}_{i}^{m}, \mathbf{f}_{j}^{m},\) \(\mathbf{u}_{i}^{k-K:k}-\mathbf{u}_{j}^{k-K:k}\), \(\mathbf{x}_{i}-\mathbf{x}_{j}\), \(\theta_{PDE}\) \())\)

The difference in spatial coordinates helps enforce translational symmetry and, combined with the difference in node solutions, relates the message passing to a local difference operator. The addition of the PDE parameters is motivated by considering what the MP-PDE should generalize over: by adding this information in multiple places, flexibility can potentially be learned since all this information (as well as the node embeddings) is fed through a two-layer MLP. In addition, the solution of a PDE at any timestep must respect the boundary condition (the same as in classical methods for BVPs), so adding the PDE parameters in the edge update provides knowledge of the boundary conditions to the neural solver.

\(\text{node } i \text{ update:} \qquad\) \(\mathbf{f}_{i}^{m+1}\) \(=\) \(\psi\) \((\) \(\mathbf{f}^{m}_{i}\), \(\sum_{j \in \mathcal{N}(i)} \mathbf{m}_{ij}^{m}\), \(\theta_{PDE}\) \()\)

The future node embedding is updated using the current node embedding, the aggregation of all received messages, and (again) the PDE parameters. This information is also fed through a two-layer MLP.

Bar-Sinai et al. explores the relationship between FDM and FVM as used in the method of lines

. In both methods, the \(n^{th}\) order derivative at a point \(x\) is approximated by \(\partial^{(n)}_{x}u \approx \sum_{i} a^{(n)}_{i} u_{i}\)

for some precomputed coefficients \(a^{(n)}_{i}\). The right hand side parallels the message passing scheme, which aggregates the local difference (\(\mathbf{u}_{i}^{k-K:k}-\mathbf{u}_{j}^{k-K:k}\) in the edge update) and other (learned) embeddings over neighborhoods of nodes.

This relationship gives an intuitive understanding of the message passing GNN, which mimics FDM for a single layer, FVM for two layers, and WENO5 for three layers

. WENO5 is a numerical interpolation scheme used to reconstruct the solution at cell interfaces in FVM. While the interpretation is desirable, how far this holds in the actual function of the MP-GNN is harder to address. The concepts of the nodes as integration points and messages as local differences break down as the nodes and edges update. In addition, the furthest node that contributes a message from for any point is at \(n\) edges away for the \(n^{th}\) layer (or a specified limit). This results in a very coarse and potentially underinformed approximation for the first layer which is then propagated to the next layers. However, both the updates use two layer MLPs which (although abstracting away from their respective interpretations) may in effect learn optimal weightings to counterbalance this.

- Decoder

The approximated spatial derivatives are then combined and smoothed using a CNN which outputs a bundle of next time steps (recall temporal bundling) \(\mathbf{d}_{i}\). The solution is then updated:

\(\mathbf{u}^{k+l}_{i} = u^{k}_{i} + (t_{k+l}-t_{k})\mathbf{d}^{l}_{i}\)

Some precedence is seen, for example, in classical linear multistep methods which (though effective) face stability concerns. Since the CNN is adaptive, it appears that it avoids this issue

.

Results

Quantitative measures: accumulated error, runtime

Accumulated error: \(\frac{1}{n_{x}} \sum_{x,t} MSE\)

Runtime (s): Measured time taken to run for a given number of steps.

As a general neural PDE solver, the MP-GNN surpasses even the current state-of-the-art FNO.

For example, after training a neural model and setting up an instance of MOL, this is a brief comparison of how they can generalize without re-training.

| Generalization to... | MP-GNN | FNO | Classical (MOL) |

|---|---|---|---|

| New PDEs | Yes | No | No |

| Different resolutions | Yes | Yes | No (unless downsampling) |

| Changes in PDE parameters | Yes | Yes | Sometimes |

| Non-regular grids | Yes | Some | Yes (dependent on implementation) |

| Higher dimensions | Yes | No | No |

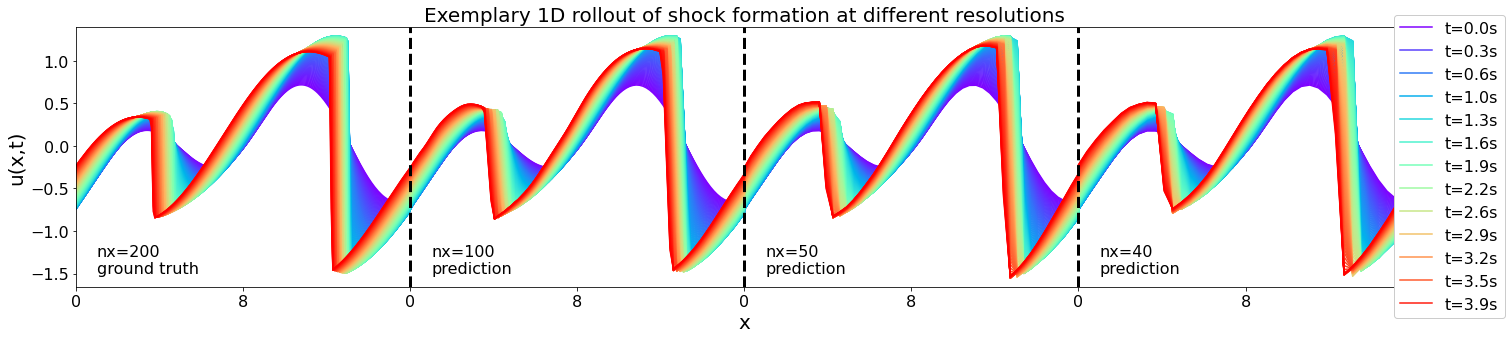

This experiment exemplifies the MP-PDE’s ability to model shocks (where both the FDM and PSM methods fail) across multiple resolutions. Even at a fifth of the resolution of the ground truth, both the small and large shocks are captured well.

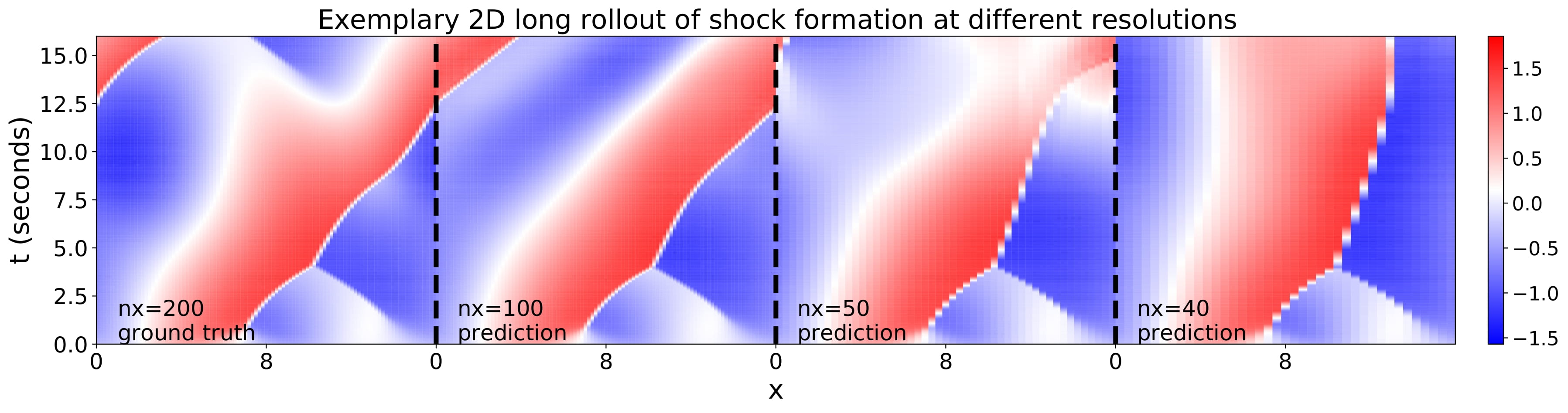

The same data is displayed in 2D to show the time evolution. After about 7.5s, the error accumulation is large enough to visibly diverge from the ground truth. The predictions become unreliable due to error accumulation.

In practice, this survival time should be empirically found (as seen here) to determine for how long the solution is reliable. However, the ground truth would be needed for comparison, rendering this as another chicken-egg problem.

| Accumulated Error | Runtime [s] | ||||||

|---|---|---|---|---|---|---|---|

| \(\quad (n_{t},n_{x})\) | WENO5 | FNO-RNN | FNO-PF | MP-PDE | WENO5 | MP-PDE | |

| E1 | (250,100) | 2.02 | 11.93 | 0.54 | 1.55 | 1.9 | 0.09 |

| E1 | (250, 50) | 6.23 | 29.98 | 0.51 | 1.67 | 1.8 | 0.08 |

| E1 | (250, 40) | 9.63 | 10.44 | 0.57 | 1.47 | 1.7 | 0.08 |

| E2 | (250, 100) | 1.19 | 17.09 | 2.53 | 1.58 | 1.9 | 0.09 |

| E2 | (250, 50) | 5.35 | 3.57 | 2.27 | 1.63 | 1.8 | 0.09 |

| E2 | (250, 40) | 8.05 | 3.26 | 2.38 | 1.45 | 1.7 | 0.08 |

| E3 | (250, 100) | 4.71 | 10.16 | 5.69 | 4.26 | 4.8 | 0.09 |

| E3 | (250, 50) | 11.71 | 14.49 | 5.39 | 3.74 | 4.5 | 0.09 |

| E3 | (250, 40) | 15.97 | 20.90 | 5.98 | 3.70 | 4.4 | 0.09 |

Abbreviations

| Shorthand | Meaning |

|---|---|

| E1 | Burgers' equation without diffusion |

| E2 | Burgers' equation with variable diffusion |

| E3 | Mixed equation, see below |

| \(n_{t}\) | Temporal resolution |

| \(n_{x}\) | Spatial resolution |

| WENO5 | Weighted Essentially Non-Oscillatory (5th order) |

| FNO-RNN | Recurrent variation of FNO from original paper |

| FNO-PF | FNO with the pushforward trick added |

| MP-PDE | Message passing neural PDE solver |

The authors form a general PDE in the form

\([\partial_{t}u + \partial_{x}(\alpha u^{2} - \beta \partial_{x} u + \gamma \partial_{xx} u)](t,x) = \delta (t,x)\)

\(u(0,x) = \delta(0,x)\)

such that \(\theta_{PDE} = (\alpha, \beta, \gamma)\) and different combinations of these result in the heat equation, Burgers' equation, and the KdV equation. \(\delta\) is a forcing term, allowing for greater variation in the equations being tested.

For this same experiment, the error and runtimes were recorded when solving using WENO5, the recurrent variant of the FNO (FNO-RNN), the FNO with the pushforward trick (FNO-PF), and the MP-PDE.

The pushforward trick is successful in mitigating error accumulation.

Comparing the accumulated errors of FNO-RNN and the FNO-PF across all experiments highlights the advantage of the pushforward trick. While the MP-PDE outperforms all other tested methods in the two generalization experiments E2 and E3, the FNO-PF is most accurate for E1.

When solving a single equation, the FNO likely performs better, though both FNO-PF and MP-PDE methods outperform WENO5.

Neural solvers are resolution-invariant.

As \(n_{x}\) is decreased, WENO5 performs increasingly worse whereas all the neural solvers remain relatively stable.

Neural solver runtimes are constant to resolution.

Additionally, the runtimes of WENO5 decrease (likely proportionally) since fewer steps require fewer calculations, but the MP-PDE runtimes again appear relatively stable.

Conclusion

Future Directions

The authors conclude by discussing some future directions.

For example, the MP-PDE can be modified for PDE retrieval (which they call parameter optimization). There is some precedence for this: Cranmer et al. develop a method which fits a symbolic regression model (eg.: PySR, eureqa) to the learned internal functions of a GNN

Adaptive time stepping is another avenue which could make the model more efficient and accurate by taking large steps over stable/predictable solution regions and smaller steps over changing/unpredictable solution regions. The choice of a CNN for the decoder works well over regular inputs and outputs, but other options like attention-based architectures could potentially weigh the outputted node embeddings such that the model might learn different time steps. Some care would have to be taken with temporal bundling in this case, since the resulting vectors would be potentially irregular in time.

The one-step loss which is the basis of the adversarial-style loss is also used in reinforcement learning, which frequently uses deep autoregressive models. Other formulations which borrow from reinforcement learning (where distribution shifts are quite common) and other fields could prove successful as well. Transformer-based natural language processing are now capable of capturing extremely long sequence dependencies and generating coherent long-form text. Since Transformers are GNNs which use attention to aggregate neighborhoods, this may be a viable avenue to explore.

One more potential direction is inspired by the recent GRAND paper.

Brandstetter et al. emphasizes the value of relationships to classical solvers - in fact, this is one of the key benefits of hybrid autoregressive models. However, modeling continuous functions as in neural operator models typically outperforms their competitors. Even the MP-PDE is fully neural, making it less explanable than the hybrid autoregressive models introduced earlier.

The Graph Neural Diffusion (GRAND) model introduced by Chamberlain et al. demonstrates that GNN can be crafted using differential equations (like diffusion processes) where, similarly to Brandstetter et al., the spatial derivative is analogous to the difference between node features

Rather than “representationally [containing] some classical methods”

For example, standard MP-GNNs are shown to be equivalent to the explicit single-step Euler scheme; other classical solvers result in different flavours of message passing. Using GRAND to extend the MP-PDE would require rethinking the encoder and decoder, but the potential benefit could result in more reliability and therefore wider adoption of neural solvers for real world applications.

Remarks

In their paper “Message Passing Neural PDE Solver”, Brandstetter at al. present a well-motivated neural solver based on the principle of message passing. The key contributions are the end-to-end network capable of one-shot generalization, and the mitigation of error accumulation in autoregressive models via temporal bundling and the pushforward trick. Note that the latter are self-contained can be applied to other architectures (as in the FNO-PF), providing a valuable tool to improve autoregressive models.